“Digitale Wissenbissen": Generative AI agents - One job, one bot

In this episode, we take a deep dive into agentic AI and explore whether specialized AI agents can revolutionize business processes by handling complex tasks autonomously and efficiently.

"Digitale Wissensbissen": The future of data analysis – A conversation with Christian Schömmer

Data Warehouse, Data Lake, Data Lakehouse: the terms keep escalating. But what do I really need, and for which purpose? Is my old (and expensive) database sufficient, or would a “Data Lakehouse” really help my business? Especially in combination with Generative AI, the possibilities are as diverse as they are confusing. Together with Christian Schömmer, we sit down in front of the data house by the lake and get to the bottom of it.

"Digitale Wissensbissen": Generative AI in Business-Critical Processes

After taking a somewhat critical view of generative AI in the last episode, this time we look at concrete applications: can generative AI already be integrated into business processes and, if so, how exactly does that work? It turns out that if you follow two or three basic rules, most of the problems fade into the background and the cool possibilities of generative AI can be exploited with relatively little risk. We discuss in detail how we built a compliance application that maximizes the benefits of large language models without sacrificing human control and accountability. (Episode in German)

Bleeding Edge - curse or blessing?

We rely on bleeding-edge technologies to drive companies forward with innovative solutions. That's why, in discussions with customers and partners or in our webinars, we are always keen to explain the benefits and possibilities of modern technologies to companies. But we also use AI ourselves: by automating tendering processes, we have been able to save valuable resources and increase efficiency.

Cloud Migration: A double-edged sword ("Digitale Wissensbissen")

We see so many cloud migrations nipped in the bud because the simple “lift & shift” approach is so widely promoted. In this episode, we explain why this approach, while attractive, forgoes all the benefits of the cloud and usually results in huge cost increases instead. Of course, we also discuss how to do it better.

Current AI is like a 12-year-old colleague

Technology must be embedded in and adapted to the process, not float alongside it unconnected. This also applies to large language models, even though their "humanity" gives the impression that they can be treated like a colleague rather than a tool. But how many business areas benefit from a colleague with the intellectual capacity of a twelve-year-old?

ChatGPT "knows" nothing

Language models notoriously struggle to recall facts reliably. Unfortunately, they also almost never answer "I don't know". The burden of distinguishing between hallucination and truth therefore rests entirely on the user. In effect, users must verify every piece of information the language model gives them, which means obtaining the fact they are looking for from another, reliable source anyway. As knowledge repositories, LLMs are therefore worse than useless.

Rundify - read, understand, verify

Digital technology has overloaded people with information, but technology can also help them to turn this flood into a source of knowledge. Large language models can - if used correctly - be a building block for this. Our "rundify" tool shows what something like this could look like.

ChatGPT and the oil spill

As with deep learning before, data remains important in the context of large language models. But this time around, since somebody else trained the foundation model, it is impossible to tell what data is really in there. Since missing data causes hallucinations, among other problems, this ignorance has severe consequences.

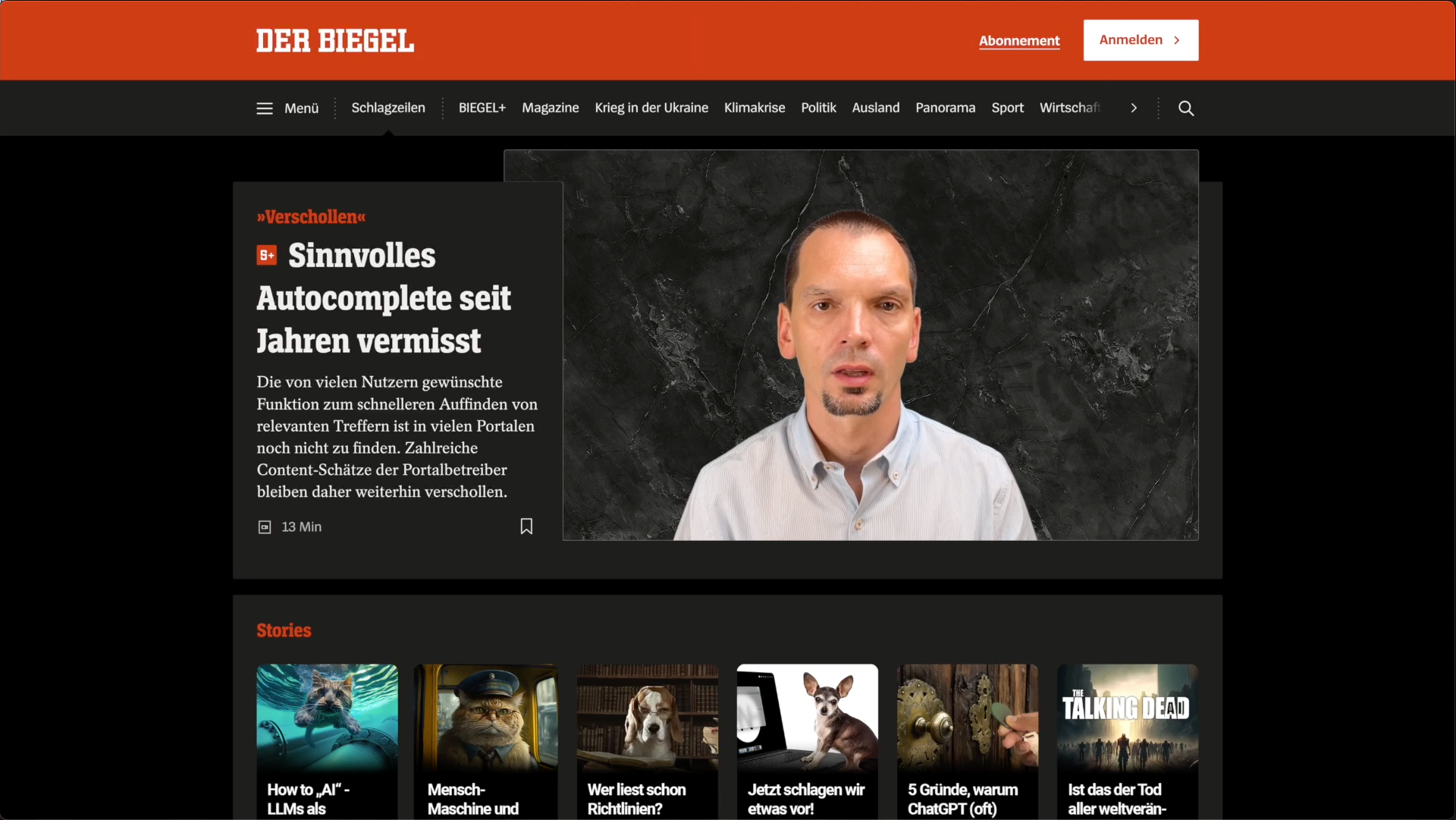

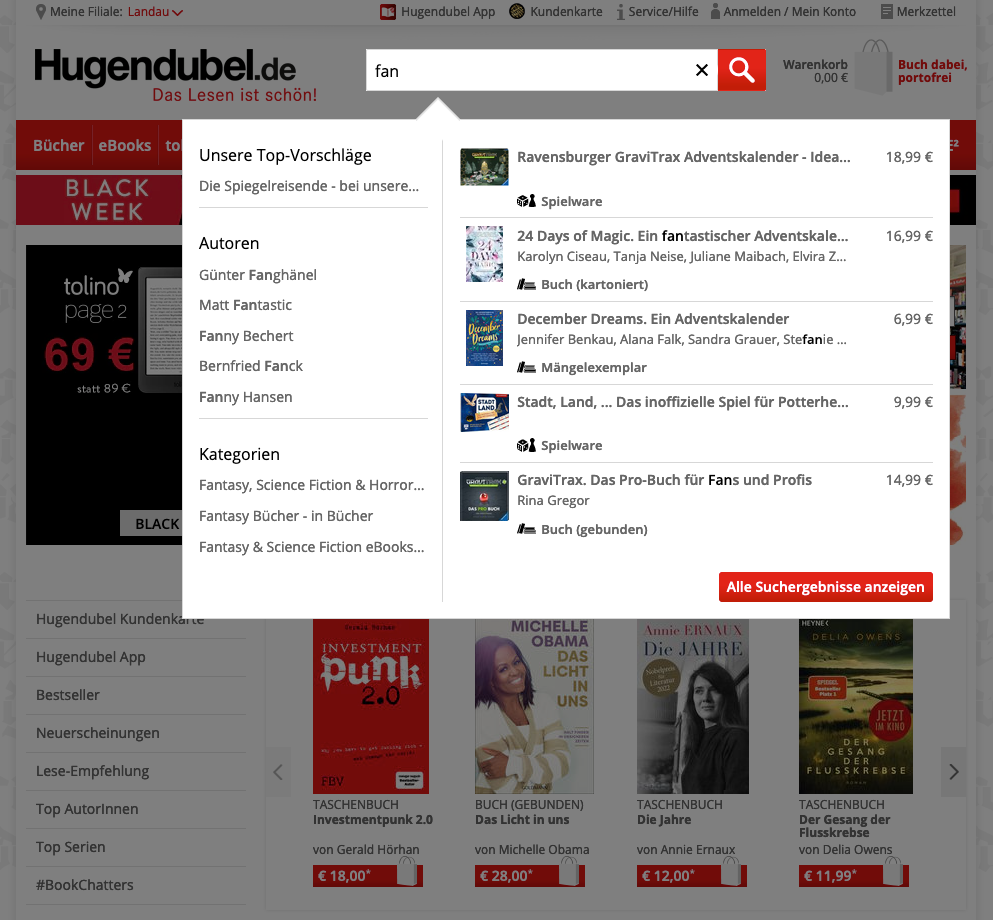

Meaningful autocomplete still missing

We take a look at major German media portals and how they handle search and autocomplete. Spoiler: the situation remains sad.

How to "AI" - LLMs as a Commodity

How can companies innovate using AI? How do you go beyond wrapping ChatGPT in a pretty UI?

Man-machine and machine-man

What ticket sellers and Instagrammers have in common, and how AI might cost them both their jobs.

Who reads policies anyway?

The demo of our new product "Quaestio" pits AI and paperwork against each other. It looks like the AI is winning.

Now we suggest something!

At the end of last year, Johannes wrote a blog post about how online magazines can improve user experience by implementing an autocomplete feature. “Proxima” is our proposed solution for delivering the best possible results.

5 reasons why ChatGPT is (often) not the solution

The release of GPT-4 is renewing the buzz around Large Language Models. Are these tools ready for prime time now?

Is this the death of all world-changing AI applications?

If an AI sometimes lies to me, is it of any use at all? We examine the areas of application for which lying AI is suitable and where it has no place.

Chat and other GPTs (ChatGPT and its predecessors)

What is ChatGPT capable of? Instead of uncritical applause or doomsday scenarios, a level-headed look at the underlying technologies and the possibilities that arise from them.

Cloud Naive or Cloud Native

We usually prefer to discuss text AI and natural language processing, but in this video we go back to the basics: scaling, the serverless trend, and cloud-native services, all things you might be interested in.

More value per dollar

We develop products. Whether for ourselves or for customers, we invest the same enthusiasm and apply the same quality standards. Here we describe how we deliver the maximum added value.

Helpful at all costs - ChatGPT and the end of the software engineer

ChatGPT may help software developers in some cases, but it won't replace them. It can be helpful to a certain degree, but its eagerness to give wrong answers can be counterproductive. (Automatically generated summary)

May I suggest something?

Web portals should go beyond offering a search function and implement Suggest features to make searching easier, more relevant and more enjoyable. This is possible by providing suggestions in different categories. (Summary generated by NEOMO summarizer)

To search or not to search

We are still not done with search, although we have been at it since 2001.

What’s in a name?

Some may claim that my co-founder Florian and I simply enjoy rebranding companies on a regular basis.
